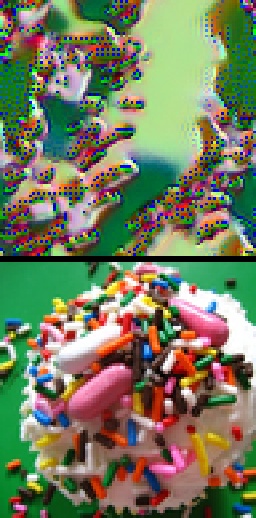

Rutt-Etra-Izer is a WebGL emulation of the classic Rutt-Etra video synthesizer. This demo replicates the Z-displacement, scanned-line look of the original, but does not attempt to replicate its full feature set.

The demo lets you drag and drop your own images, manipulate them, and save the output. Images are generated by scanning the pixels of the input image from top to bottom, with scan lines separated by the ‘Line Separation’ amount. For each line generated, the z-position of each vertex depends on the brightness of the corresponding pixel.
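The scanning step can be sketched in a few lines of Python. This is a minimal sketch, not the demo's WebGL code. It assumes the image is already a grid of grayscale brightness values from 0 to 255, and `scan_lines` and `z_scale` are hypothetical names.

```python
def scan_lines(pixels, line_separation, z_scale=1.0):
    """Scan a grayscale image top to bottom into z-displaced lines.

    pixels: list of rows, each a list of brightness values from 0 to 255
    (a hypothetical input format). Every `line_separation`-th row becomes one
    polyline of (x, y, z) vertices, with z proportional to pixel brightness.
    """
    lines = []
    for y in range(0, len(pixels), line_separation):
        lines.append([(x, y, z_scale * value / 255.0) for x, value in enumerate(pixels[y])])
    return lines


image = [
    [0, 128, 255],
    [10, 20, 30],
    [255, 255, 0],
]
for line in scan_lines(image, line_separation=2, z_scale=10.0):
    print(line)
```

Each returned polyline could then be drawn as a line strip. Brighter pixels push the line further along z.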

20 alternative interfaces for creating and editing images and text

https://github.com/constraint-systems

Flow

An experimental image editor that lets you set and direct pixel-flows.

Fracture

Shatter and recombine images using a grid of viewports.

Tri

Tri is an experimental image distorter. You can choose an image to render using a WebGL quad, adjust the texture and position coordinates to create different distortions, and save the result.

Tile

Layout images using a tiling tree layout. Move, split, and resize images using keyboard controls.

Sift

Slice an image into multiple layers. You can offset the slices to create interference patterns and pseudo-3D effects.

Automadraw

Draw and evolve your drawing using cellular automata on a pixel grid with two keyboard-controlled cursors.

Span

Lay out and rearrange text, line by line, using keyboard controls.

Stamp

Image-paint from a source image palette using keyboard controls.

Collapse

Collapse an image into itself using ranked superpixels.

Res

Selectively pixelate an image using a compression algorithm.

Rgb

Pixel-paint using keyboard controls.

Face

Edit both the text and the font it is rendered in.

Pal

Apply an eight-color terminal color scheme to an image. Use the keyboard controls to choose a theme, set thresholds, and cycle hues.
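A simple way to map an image onto an eight-color scheme is nearest-color quantization, where each pixel takes whichever palette color is closest in RGB space. This sketch shows that general technique, not Pal's own method; its thresholds and hue cycling are not modeled. The pure-primary `THEME` below is a hypothetical palette, not a real terminal theme.

```python
# A hypothetical eight-color theme built from pure RGB primaries and secondaries.
THEME = {
    "black": (0, 0, 0),
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "yellow": (255, 255, 0),
    "blue": (0, 0, 255),
    "magenta": (255, 0, 255),
    "cyan": (0, 255, 255),
    "white": (255, 255, 255),
}


def nearest_color(rgb, theme=THEME):
    """Return the name of the theme color closest to `rgb` (squared Euclidean distance)."""
    return min(theme, key=lambda name: sum((a - b) ** 2 for a, b in zip(rgb, theme[name])))


def apply_theme(pixels, theme=THEME):
    """Replace every (r, g, b) pixel in a list of rows with its nearest theme color."""
    return [[theme[nearest_color(p, theme)] for p in row] for row in pixels]


print(apply_theme([[(250, 10, 10), (20, 20, 20)], [(200, 200, 40), (240, 240, 250)]]))
```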

Bix

Draw on binary to glitch text.

Diptych

Pixel-reflow an image to match the dimensions of your text. Save the result as a diptych.

Slide

Divide and slide-stretch an image using keyboard controls.

Freeconfig

Push around image pixels in blocks.

Moire

Generate angular skyscapes using Asteroids-like ship controls.

Hex

A keyboard-driven, grid-based drawing tool.

Etch

A keyboard-based pixel drawing tool.

About

Constraint Systems is a collection of experimental web-based creative tools. They are an ongoing attempt to explore alternative ways of interacting with pixels and text on a computer screen. I hope to someday build these ideas into something larger, but the plan for now is to keep the scopes small and the releases quick.

New Art City’s mission is to develop an accessible toolkit for building virtual installations that show born-digital artifacts alongside digitized works of traditional media.

Our curation and product design prioritize those who are disadvantaged by structural injustice. An inclusive and redistributive community is as important to our project as the toolkit itself.

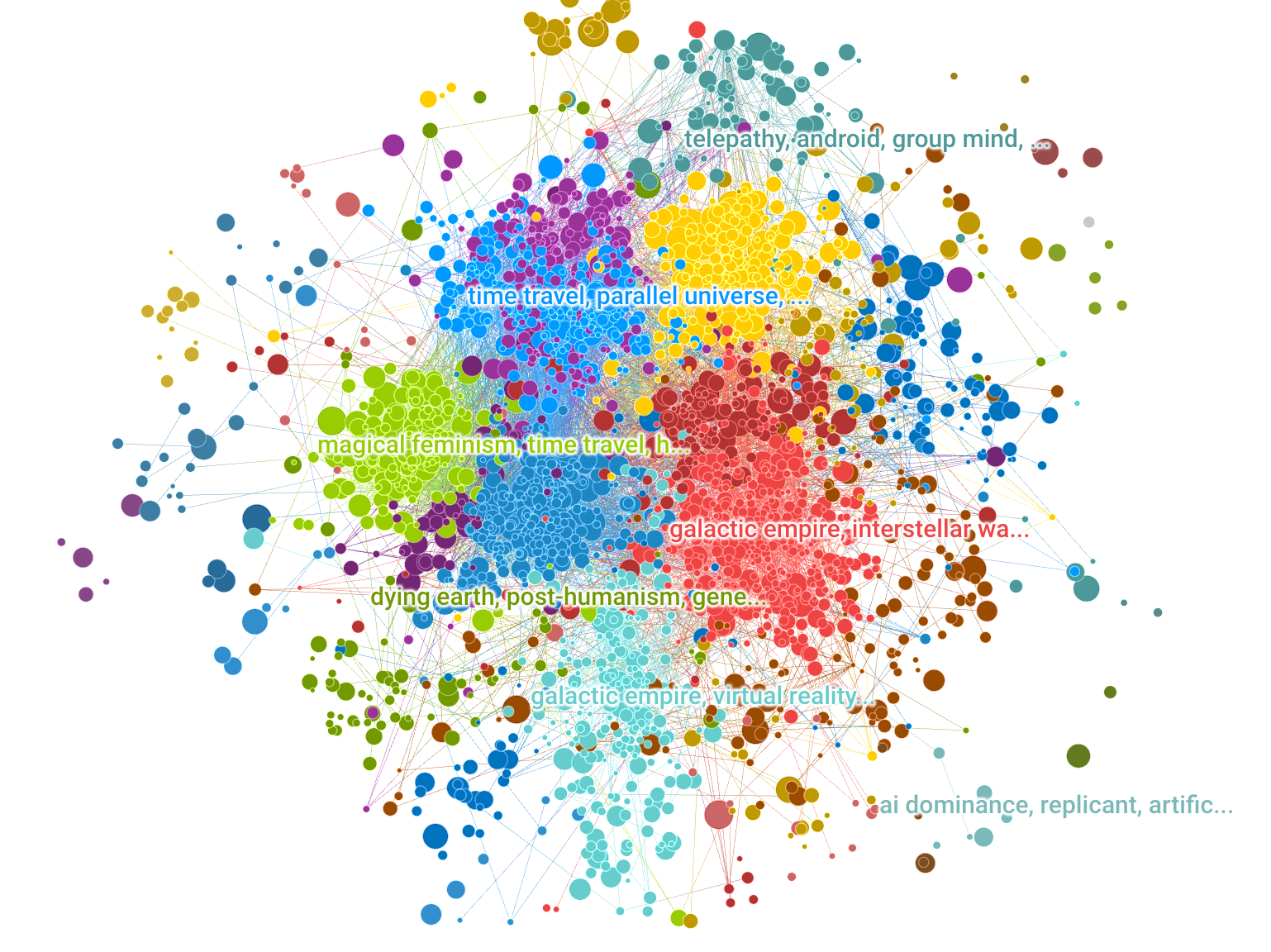

All Sci Fi novels published since 1900 were scraped from Goodreads, and for each novel, all reader comments, plot descriptions, and user-generated tags were compiled. Keywords and concepts were added to each novel by parsing this text and mapping the results to a curated dictionary of SciFi keywords and concepts. 2,633 novels published since 1990 had at least 50 reviews and contained at least one keyword from the keyword corpus.

After keyword enhancement, a book network was created by linking novels that share similar keywords. Network clusters identify Keyword Themes: groups of similar books, each labeled with the keywords most commonly shared within the group.
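Here is a minimal sketch of the linking and labeling steps. It assumes each novel has already been reduced to a set of keywords. It is not the tag2network API. The rule "share at least `min_shared` keywords" stands in for whatever similarity measure the project actually used, and cluster detection itself is assumed to happen elsewhere. The titles and keywords are toy data.

```python
from collections import Counter
from itertools import combinations


def keyword_network(books, min_shared=1):
    """Link every pair of books that share at least `min_shared` keywords.

    books maps a title to its set of keywords; the result maps each linked
    (title_a, title_b) pair to the keywords the two books share.
    """
    edges = {}
    for a, b in combinations(sorted(books), 2):
        shared = books[a] & books[b]
        if len(shared) >= min_shared:
            edges[(a, b)] = shared
    return edges


def theme_label(cluster, books, top_n=3):
    """Label a cluster of titles with its most frequent keywords."""
    counts = Counter(keyword for title in cluster for keyword in books[title])
    return [keyword for keyword, _ in counts.most_common(top_n)]


# Toy data with hypothetical titles and keywords.
books = {
    "Book A": {"desert", "empire", "religion"},
    "Book B": {"empire", "robots"},
    "Book C": {"ocean", "first contact"},
}
print(keyword_network(books))  # {('Book A', 'Book B'): {'empire'}}
print(theme_label(["Book A", "Book B"], books, top_n=1))  # ['empire']
```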

The network was generated using the open source Python 'tag2network' package and visualized here using 'openmappr'. The scripts for analyzing this dataset are available at https://github.com/ericberlow/SciFi

This is a collaborative project of Bethanie Maples, Srini Kadamati, and Eric Berlow.

NOTE: This visualization performs best full screen in Chrome and Safari, and is not optimized for the small screens of mobile devices.

How to Navigate this Network:

Click on any node to see more details about it.

Click the whitespace or 'Reset' button to clear any selection.

Use the Snapshots panel to navigate between views.

Use the Filters panel to select nodes by any combination of attributes.

Click the 'Subset' button to restrict the data to the selected nodes; the Filters panel will then show a summary of that subset.

Use the List panel to see a sortable list of any nodes selected or subset. You can also browse their details one by one by clicking on them in the list.

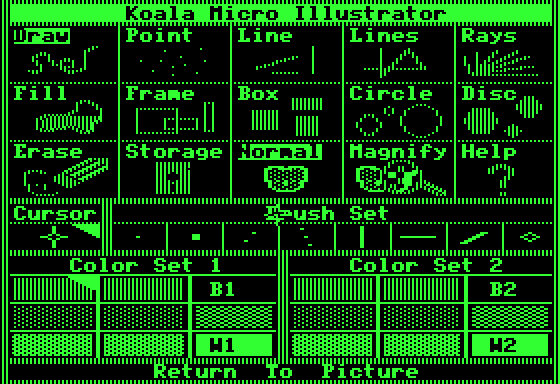

This timeline is the result of researching the origins of digital paint and draw software, and of the tools developed for drawing and painting by hand (as opposed to plotter drawing): the mouse, the light pen and the drawing tablet. If we look at the software that has become commonplace today (such as Adobe Photoshop), which combines painting, animation and photo manipulation, we can trace its roots to the university and corporate labs that housed large computers with advanced capabilities for their time: MIT Lincoln Labs & Radiation Labs, DARPA & the Augmentation Research Centre (ARC), Bell Labs, NYIT’s Computer Graphics Lab, Xerox Palo Alto Research Centre (Xerox PARC), and NASA’s Jet Propulsion Labs (JPL). The artistic collaborations that grew out of these labs fueled the advent of Computer Graphics, Computer Art and Video Art from the 1960s to the 1990s.

This visual timeline starts by tracing the paint systems, frame buffers, and graphical user interfaces created in these labs, with a focus on the first paint/draw software and the various drawing tools. I am interested in how the larger corporate, and often military-funded, laboratories affected the dawn of the personal computer and its introduction into the home. The timeline continues through the 1980s, with a focus on the software and hardware developed for the home market from the late 1970s to the 1990s.

EmotiBit is a wearable sensor module for capturing high-quality emotional, physiological, and movement data. Easy-to-use, scientifically validated sensing lets you enjoy wireless data streaming to any platform or direct data recording to the built-in SD card. Customize the Arduino-compatible hardware and fully open-source software to meet any project needs!

OPENRNDR is a tool to create tools. It is an open source framework for creative coding, written in Kotlin for the Java VM that simplifies writing real-time interactive software. It fully embraces its existing infrastructure of (open source) libraries, editors, debuggers and build tools. It is designed and developed for prototyping as well as the development of robust performant visual and interactive applications. It is not an application, it is a collection of software components that aid the creation of applications.

Key features

a lightweight application framework to quickly get you started

a fast OpenGL 3.3 backed drawer written using the LWJGL OpenGL bindings

a set of minimal and clean APIs that welcome programming in a modern style

an extensive shape drawing and manipulation API

asynchronous image loading

runs on Windows, MacOS and Linux

Ecosystem

Applications written in OPENRNDR can communicate with third-party tools and services, either using OPENRNDR’s functionality or via third-party Java libraries.

Existing use cases involve connectivity with devices such as Arduino, Philips Kinet, Microsoft Kinect 2.0, RealSense, DMX, ARTNet and MIDI devices; applications that communicate through OpenSoundControl; and services such as weather reports and Twitter. If you want to experiment with machine learning, try RunwayML, which comes with an OPENRNDR integration.

A platform for interactive spaces, interactive environments, interactive objects and prototyping.

tramontana leverages the capabilities of the object we have all come to carry with us everywhere, all the time: our smartphones. With libraries for Processing, JavaScript and openFrameworks, you can access the inputs and outputs of one or more smartphones to quickly prototype interactive spaces, connected products, or just something you’d like to be wireless. What used to involve complex tasks like networking and native app development can now be done with a single sketch on your computer.

All The Tropes is a community-edited wiki website dedicated to discussing Creators, Works, and Tropes -- the people, projects and patterns of creative writing in all kinds of entertainment: television, literature, movies, video games, and more.

Hello my dear friend,

I found a strange game on my computer a few days ago. I have tried to find out how it works but have failed in all my attempts. I hope you have more luck than I have. Please tell me if you can figure it out, because I think it's driving me C̷̖̆͊R̸̢̮̍Å̵̘̪̚Z̴͔̲̈́Ỳ̸̒ͅ. I'm sure the guide holds the key to solving it, but if not, you can always have some fun with the sinuous movements of the strange creatures that live in this game. I hope you enjoy it.

With love,

@beleitax

Can I use the art I find here? How should I credit the artist?

Yes, you can use any of the art submitted to this site. Even in commercial projects. Just be sure to adhere to the license terms. Artists often indicate how they would like to be credited in the "Copyright/Attribution Notice:" section of the submission. You can find this between the submission's description and the list of downloadable files. If no Copyright/Attribution Notice instructions are given, a good way to credit an author for any asset is to put the following text in your game's credits file and on your game's credits screen:

"[asset name]" by [author name] licensed [license(s)]: [asset url]

For example:

"Whispers of Avalon: Grassland Tileset" by Leonard Pabin licensed CC-BY 3.0, GPL 2.0, or GPL 3.0: https://opengameart.org/node/3009

OpenGameArt Search + Reverse Image Search

Hint: Start search term with http(s):// for reverse image search.

The Collections database consists of entries for more than 480,000 works in the Musée du Louvre and Musée National Eugène-Delacroix. Updated on a daily basis, it is the result of the continuous research and documentation efforts carried out by teams of experts from both museums.

🌑😄🌑🌑🌑🌑🌑🌑🌑🌑

🌑🌑🌑🌑🌑🌑🌑🌑🌑🌑

🌑🌑🌑🌓🌗🌓🌗🌑😄🌑

🌑🌑🌑🌓🌗🌓🌗🌑🌑🌑

🌑🌑🌑🌓🌕🌕🌗🌑🌑🌑

🌑😄🌑🌓🌗🌓🌗🌑🌑🌑

🌑🌑🌑🌓🌗🌓🌗🌑🌑🌑

🌑🌑🌑🌑🌑🌑🌑🌑🌑🌑

🌑🌑🌑🌑🌓🌗🌑🌑🌑🌑

🌑🌑🌑🌑🌓🌗🌑🌑😄🌑

🌑🌑🌑🌑🌓🌗🌑🌑🌑🌑

🌑😄🌑🌑🌓🌗🌑🌑🌑🌑

🌑🌑🌑🌑🌓🌗🌑🌑🌑🌑

🌑🌑🌑🌑🌑🌑🌑🌑🌑🌑

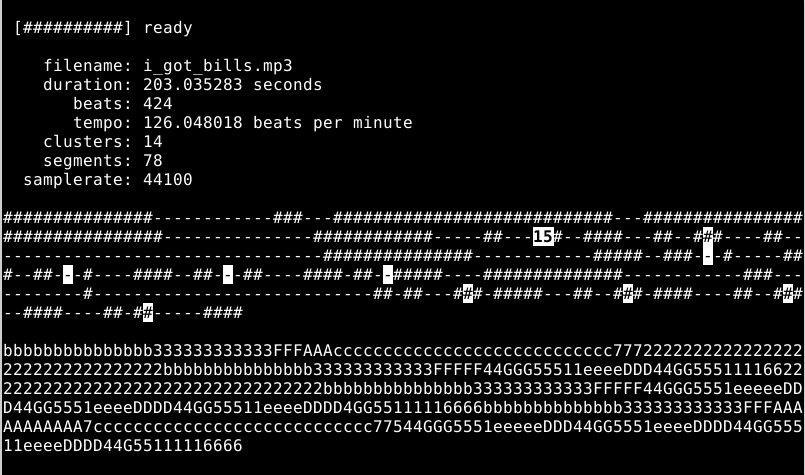

Creates an infinite remix of an audio file by finding musically similar beats and computing a randomized play path through them. The default choices should be suitable for a variety of musical styles. This work is inspired by the Infinite Jukebox (http://www.infinitejuke.com) project created by Paul Lamere.

It groups musically similar beats of a song into clusters and then plays a random path through the song that makes musical sense but does not repeat. It will do this infinitely.
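The play-path idea can be sketched as a random walk over beats. The sketch assumes the beats have already been clustered; the audio analysis and clustering are not shown. The `jump_prob` parameter and the branch-to-successor rule are assumptions, not this project's actual code. The sketch also only avoids a fixed loop; it does not strictly guarantee that no passage ever recurs.

```python
import random


def remix_path(clusters, length, jump_prob=0.25, seed=None):
    """Return the first `length` beats of an endless, randomized play order.

    clusters[i] is the cluster label of beat i (beats in the same cluster are
    assumed to sound alike). After beat i the path normally continues to i + 1;
    with probability jump_prob it instead continues from a similar beat j,
    i.e. plays j + 1, so the transition still sounds like i -> i + 1.
    """
    rng = random.Random(seed)
    n = len(clusters)
    members = {}
    for beat, label in enumerate(clusters):
        members.setdefault(label, []).append(beat)

    path = [0]
    while len(path) < length:
        i = path[-1]
        # Successors of other beats that sound like beat i.
        options = [j + 1 for j in members[clusters[i]] if j != i and j + 1 < n]
        at_end = i + 1 >= n
        if options and (at_end or rng.random() < jump_prob):
            path.append(rng.choice(options))  # branch to a similar point
        elif at_end:
            path.append(0)  # no branch available: wrap to the start
        else:
            path.append(i + 1)  # keep playing in order
    return path


# Toy labels for an 8-beat song: beats 1 and 5 sound alike, as do 2 and 6.
labels = ["a", "b", "c", "d", "e", "b", "c", "f"]
print(remix_path(labels, 16, seed=1))
```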

What is Unmixer?

Unmixer does two things: first, it lets you extract loops from any song; second, it lets you remix these loops in a simple interface.

How do I use it?

Upload a song: drag and drop an audio file (MP3, MP4) into the box with the dotted line

Wait for results: processing a new song can take several minutes.

Remix the loops: turn each sound on and off by clicking the box.

Upload more songs: you can mash-up sounds from as many songs as you like.

Extra features:

The BPM indicator in the top right lets you choose the global tempo.

The download button allows you to save a zipfile of all the loops, along with a map of where they occur in the piece.

Neural Cellular Automata (NCA; we use NCA to refer to both Neural Cellular Automata and Neural Cellular Automaton) are capable of learning a diverse set of behaviours: from generating stable, regenerating, static images, to segmenting images, to learning to “self-classify” shapes. The inductive bias imposed by using cellular automata is powerful. A system of individual agents running the same learned local rule can solve surprisingly complex tasks. Moreover, individual agents, or cells, can learn to coordinate their behavior even when separated by large distances. By construction, they solve these tasks in a massively parallel and inherently degenerate way (degenerate here refers to the biological concept of degeneracy). Each cell must be able to take on the role of any other cell; as a result, they tend to generalize well to unseen situations.

In this work, we apply NCA to the task of texture synthesis. This task involves reproducing the general appearance of a texture template, as opposed to making pixel-perfect copies. We are going to focus on texture losses that allow for a degree of ambiguity. After training NCA models to reproduce textures, we subsequently investigate their learned behaviors and observe a few surprising effects. Starting from these investigations, we make the case that the cells learn distributed, local algorithms.

To do this, we apply an old trick: we employ neural cellular automata as a differentiable image parameterization.
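The paragraphs above describe cells that all run one learned local rule. Below is a minimal NumPy sketch of a single NCA update step. It assumes a setup common in NCA work: fixed identity and Sobel perception filters, a small per-cell MLP, and random asynchronous updates. The grid sizes, the `fire_rate` parameter and the random untrained weights are illustrative assumptions. The texture loss used for training is not shown.

```python
import numpy as np

# Fixed 3x3 perception kernels: identity plus Sobel gradients in x and y.
IDENTITY = np.array([[0, 0, 0], [0, 1, 0], [0, 0, 0]], dtype=np.float32)
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float32) / 8.0
SOBEL_Y = SOBEL_X.T


def filter3x3(state, kernel):
    """Cross-correlate every channel of an (H, W, C) grid with one 3x3 kernel.

    np.roll gives wrap-around (toroidal) borders, so every cell has 8 neighbours.
    """
    out = np.zeros_like(state)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            weight = kernel[dy + 1, dx + 1]
            if weight != 0:
                # Rolling by (-dy, -dx) brings neighbour (y + dy, x + dx) to (y, x).
                out += weight * np.roll(state, shift=(-dy, -dx), axis=(0, 1))
    return out


def perceive(state):
    """Stack identity and gradient responses: (H, W, C) -> (H, W, 3C)."""
    return np.concatenate([filter3x3(state, k) for k in (IDENTITY, SOBEL_X, SOBEL_Y)], axis=-1)


def nca_step(state, w1, b1, w2, rng, fire_rate=0.5):
    """One stochastic update: every cell runs the same small MLP on its perception vector."""
    hidden = np.maximum(perceive(state) @ w1 + b1, 0.0)  # ReLU layer, (H, W, hidden)
    delta = hidden @ w2                                    # proposed change, (H, W, C)
    # Each cell fires independently, so updates are not globally synchronized.
    fires = rng.random(state.shape[:2])[..., None] < fire_rate
    return state + delta * fires


# Hypothetical sizes and untrained random weights, for illustration only.
rng = np.random.default_rng(0)
H, W, C, HIDDEN = 32, 32, 12, 96
w1 = rng.normal(0.0, 0.1, size=(3 * C, HIDDEN)).astype(np.float32)
b1 = np.zeros(HIDDEN, dtype=np.float32)
w2 = rng.normal(0.0, 0.01, size=(HIDDEN, C)).astype(np.float32)

state = rng.random((H, W, C)).astype(np.float32)
for _ in range(20):
    state = nca_step(state, w1, b1, w2, rng)
```

Because the same `w1`, `b1` and `w2` are applied at every cell, the whole model is one local rule. Training, in the differentiable-parameterization sense, would adjust those weights so that running the rule produces the target texture.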

Simon Popper, Ulysses, [a reinterpretation of James Joyce’s Ulysses (1922) rearranging all the words in the original book in alphabetical order], Privately Printed by Die Keure, Brugge/Bruges, 2006, Limited Edition of 1000 [Motto Books, Geneva]
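Sorting a text's words alphabetically takes one call once the text is split into words. Several choices in this sketch are assumptions: the tokenization (letters and apostrophes only), the case-insensitive ordering, and the name `alphabetize_words`. How the book actually treated punctuation, case and hyphenated words is not stated here.

```python
import re


def alphabetize_words(text):
    """Return every word of `text`, repeats included, in case-insensitive alphabetical order."""
    words = re.findall(r"[A-Za-z']+", text)
    return sorted(words, key=str.lower)


print(alphabetize_words("Stately, plump Buck Mulligan came from the stairhead"))
# ['Buck', 'came', 'from', 'Mulligan', 'plump', 'stairhead', 'Stately', 'the']
```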

Welcome to the Historical Dictionary of Science Fiction. This work-in-progress is a comprehensive quotation-based dictionary of the language of science fiction. The HD/SF is an offshoot of a project begun by the Oxford English Dictionary (though it is no longer formally affiliated with it). It is edited by Jesse Sheidlower.

Welcome to Smithsonian Open Access, where you can download, share, and reuse millions of the Smithsonian’s images—right now, without asking. With new platforms and tools, you have easier access to more than 3 million 2D and 3D digital items from our collections—with many more to come. This includes images and data from across the Smithsonian’s 19 museums, nine research centers, libraries, archives, and the National Zoo.

What will you create?

The Standard Ebooks project is a volunteer-driven, not-for-profit effort to produce a collection of high quality, carefully formatted, accessible, open source, and free public domain ebooks that meet or exceed the quality of commercially produced ebooks. The text and cover art in our ebooks are already believed to be in the public domain, and Standard Ebooks dedicates its own work to the public domain, thus releasing the entirety of each ebook file into the public domain. All the ebooks we produce are distributed free of cost and free of U.S. copyright restrictions.

The Hidden Palace is a community dedicated to the preservation of video game development media.

Enter the Open Library Explorer, Cami’s new experiment for browsing more than 4 million books in the Internet Archive’s Open Library. Still in beta, Open Library Explorer harnesses the Dewey Decimal or Library of Congress classification systems to virtually recreate the experience of browsing the bookshelves at a physical library. Open Library Explorer enables readers to scan bookshelves left to right by subject, and up and down for subclassifications. Switch a filter and suddenly the bookshelves are full of juvenile books. Type in “subject: biography” and you see nothing but biographies arranged by subject matter.

Why recreate a physical library experience in your browser?

Now that classrooms and libraries are once again shuttered, families are turning online for their educational and entertainment needs. With demand for digital books at an all-time high, the Open Library team was inspired to give readers something closer to what they enjoy in the physical world. Something that puts the power of discovery back into the hands of patrons.

Escaping the Algorithmic Bubble

One problem with online platforms is the way they guide you to new content. For music, movies, or books, Spotify, Netflix and Amazon use complicated recommendation algorithms to suggest what you should encounter next. But those algorithms are driven by the media you have already consumed. They put you into a “filter bubble” where you only see books similar to those you’ve already read. Cami and his team devised the Open Library Explorer as an alternative to recommendation engines. With the Open Library Explorer, you are free to dive deeper and deeper into the stacks. Where you go is driven by you, not by an algorithm.

Zoom out to get an ever-expanding view of your library

Change the setting to make your books 3D, so you can see just how thick each volume is.

Cool New Features

By clicking on the Settings gear, you can customize the look and feel of your shelves. Hit the 3D options and you can pick out the 600-page books immediately, just by the thickness of the spine. When a title catches your eye, click on the book to see whether Open Library has an edition you can preview or borrow. For more than 4 million books, borrowing a copy in your browser is just a few clicks away.

Ready to enter the library? Click here, and be sure to share feedback so the Open Library team can make it even better.

Download: https://github.com/Tw1ddle/geometrize/releases

Features

Recreate images as geometric primitives.

Start with hundreds of images with preset settings.

Export geometrized images to SVG, PNG, JPG, GIF and more.

Export geometrized images as HTML5 canvas or WebGL webpages.

Export shape data as JSON for use in custom projects and creations.

Control the algorithm at the core of Geometrize with ChaiScript scripts.

The papers summarized here are mainly from 2017 onwards.

Please refer to the survey paper (Image Aesthetic Assessment: An Experimental Survey) for work before 2016.

Optical illusions don’t “trick the eye” nor “fool the brain”, nor reveal that “our brain sucks”, … but are fascinating!

They also teach us about our visual perception, and its limitations. My selection emphasizes beauty and interactive experiments; I also attempt explanations of the underlying visual mechanisms where possible.


»Optical illusion« sounds derogatory, as if exposing a malfunction of the visual system. Rather, I view these phenomena as highlighting particularly good adaptations of our visual system to its experience with standard viewing situations. These experiences are based on normal visual conditions, and thus in unusual contexts can lead to inappropriate interpretations of a visual scene (=“Bayesian interpretation of perception”).
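The Bayesian interpretation mentioned above is usually written with Bayes' rule; this is a standard textbook formulation, not one given on this page. The perceived scene $\hat{s}$ is the candidate scene $s$ that best combines how well it explains the retinal image $i$ (the likelihood) with how common it is in standard viewing situations (the prior):

$$\hat{s} = \arg\max_{s} P(s \mid i) = \arg\max_{s} P(i \mid s)\,P(s)$$

In this account, an unusual stimulus can let a scene with a strong prior win even though it is not what is physically present.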

If you are not a vision scientist, you might find my explanations too highbrow. That is not on purpose, but vision research simply is not trivial, like any science. So, if an explanation seems gibberish, simply enjoy the phenomenon 😉.

As an editorial and curatorial platform, HOLO explores disciplinary interstices and entangled knowledge as epicentres of critical creative practice, radical imagination, research, and activism.

A showcase with creative machine learning experiments

Web scraping describes techniques for automatically downloading and processing web content, or converting online text and other media into structured data that can then be used for various purposes. In short, the user writes a program to browse and analyze the web on their behalf, rather than doing so manually. This is a common practice in Silicon Valley, where open HTML pages are transformed into private property: Facebook began as a (horny) web scraping project, as did Google and all other search engines. Web scraping is also frequently used to acquire the massive datasets needed to train machine learning models, and has become an important research tool in fields such as journalism and sociology.
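Here is a concrete example of a program that browses and analyzes the web on the user's behalf. This minimal sketch uses only the Python standard library: it downloads a page and turns its links into structured records. `LinkCollector`, `scrape_links` and `scrape_url` are hypothetical names. Real scrapers usually also need to handle pagination, rate limits and malformed markup.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class LinkCollector(HTMLParser):
    """Collect the href and visible text of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._current = None  # [href, text] while inside an <a> tag

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._current = [dict(attrs).get("href"), ""]

    def handle_data(self, data):
        if self._current is not None:
            self._current[1] += data

    def handle_endtag(self, tag):
        if tag == "a" and self._current is not None:
            href, text = self._current
            self.links.append({"href": href, "text": text.strip()})
            self._current = None


def scrape_links(html):
    """Turn raw HTML into a list of {'href', 'text'} records."""
    parser = LinkCollector()
    parser.feed(html)
    parser.close()
    return parser.links


def scrape_url(url):
    """Download a page and scrape its links."""
    with urlopen(url) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return scrape_links(response.read().decode(charset, errors="replace"))


print(scrape_links('<p><a href="/a">First</a> and <a href="/b">Second <b>link</b></a></p>'))
```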

I define "scrapism" as the practice of web scraping for artistic, emotional, and critical ends. It combines aspects of data journalism, conceptual art, and hoarding, and offers a methodology to make sense of a world in which everything we do is mediated by internet companies. These companies surveil us, vacuum up every trace we leave behind, exploit our experiences and interject themselves into every possible moment. But in turn they also leave their own traces online, traces which, when collected, filtered, and sorted, can reveal (and possibly even alter) power relations. The premise of scrapism is that everything we need to know about power is online, hiding in plain sight.

This is a work-in-progress guide to web scraping as an artistic and critical practice, created by Sam Lavigne. I will be updating it over the coming months! I'll also be doing occasional live demos either on Twitch or YouTube.

Creative Disturbance is an international, multilingual network and podcast platform supporting collaboration among the arts, sciences, and new technologies communities.

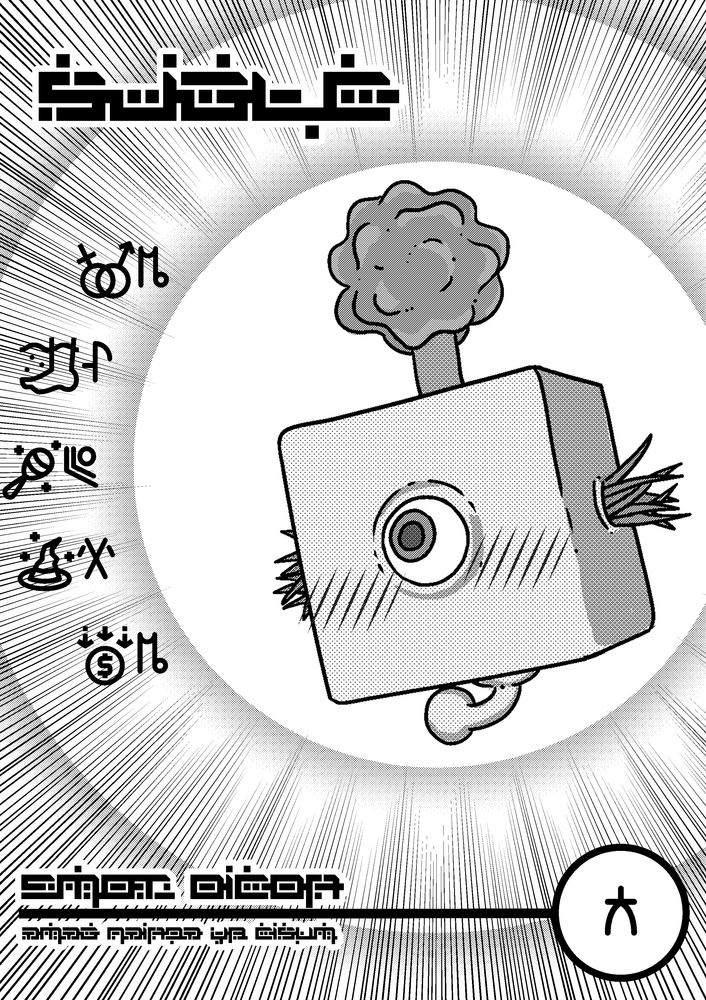

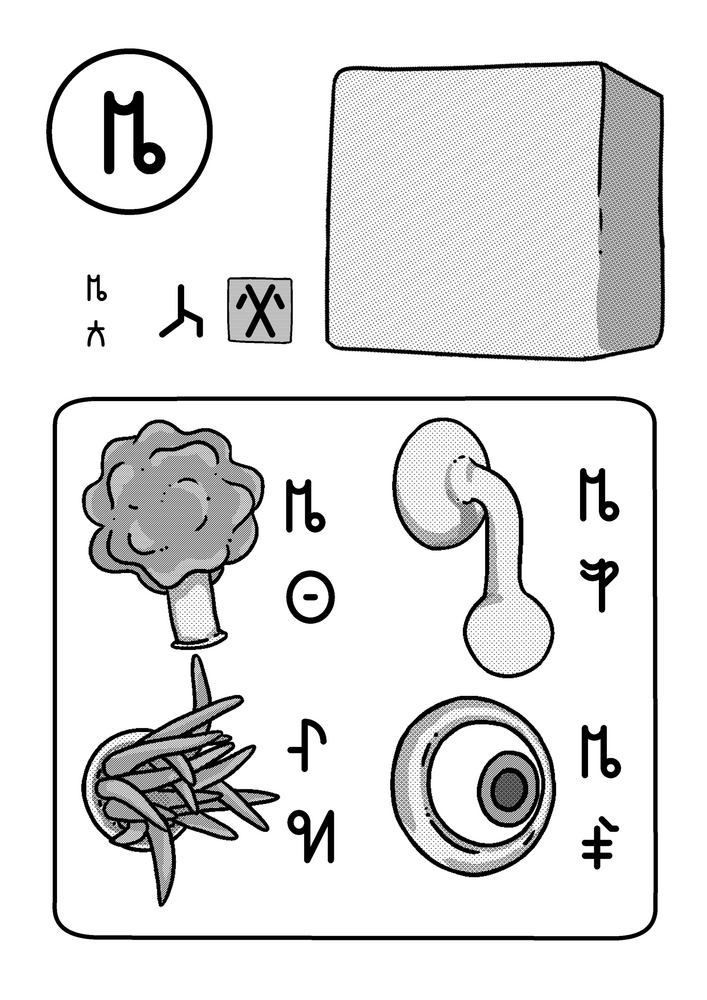

Conceptual comics is an archive of works that are unaffiliated with the commonly accepted history of the comics medium. It is a resonating chamber for conceptual works and unconventional practices that are little known outside of our community but also a springboard for establishing the conditions for an affective lineage between similarly minded practitioners. The variety of the collected material expresses the curator’s choice for a non-uniform consistency and claim instead for a perpetual becoming of the medium. Nevertheless, these works share with each other many common issues and urgencies, alternating between material self-reflexivity and critical exhaustion. They operate on the margins of distribution and reception and their unlocatedness in the medium's spectrum is more than an abstraction: artists uncomfortable with the entrenched roles invite readers, in the absence of critical discourse, to engage with the works in non-specified, at times forensic, ways of examination. I argue that this condition, more than a minor drawback of a normative industry, induces new behaviours and forms of social relationships. Each of the works that are featured in this collection explores the very substrate of its medium not as a culturally neutral site, but as a way to build alternative historiographies, replete with its own material properties and signifying potentials. They propose to examine how social and economic forces and their related sets of activities and commercial, communicative and other routines compose the media’s meaning-signifying trajectory. The rainforest of pulp production, the printer’s studio, the readers’ column and the landfill are not simply the industry's geographies but are technologies of inscription in their own right. They are the integral elements of a material language that actively shapes the medium and challenges the reader to negotiate meaning through different distributions of transparency over opacity in its products.

This collection proposes to equally embrace the real, the unclaimed, the anticipated and the fictional practices, in their constant materialisation, and reflect on their specific sites of production in their potential to register meaning and organise discourse based on the inscriptions of this material language.

About Ilan Manouach

Ilan David Manouach is a researcher and a multidisciplinary artist with a specific interest in conceptual and post-digital art. He currently holds a PhD position at Aalto University in Helsinki (adv. Craig Dworkin), where he examines the intersections of contemporary graphic literature and the 21st century’s technological disruptions. He is mostly known for Shapereader, a system for tactile storytelling specifically designed for blind and partially sighted readers/makers of comics. He is also the founder and creative director of Applied Memetic, an organization that researches the political repercussions of generative art and highlights the urgency of a new media-rich internet literacy. His work has been written about in Hyperallergic, World Literature Today, Wired, Le Monde, The Comics Journal, du9, 50 Watts and Kenneth Goldsmith’s Wasting Time on the Internet. Fuller documentation of these projects is available through the Brussels-based non-profit Echo Chamber, which is responsible for producing, fundraising, documenting and archiving Manouach’s research on contemporary comics; this research has been presented in solo exhibitions at important festivals, museums and galleries worldwide. He is an Onassis Digital Fellow and a Kone alumnus, and he works as a strategy consultant for the Onassis Foundation’s visibility through its newly founded publishing arm.

Commonspoly is a free licensed board game which promotes cooperation and the act of commoning.

Orca is an esoteric programming language designed by @hundredrabbits to create procedural sequencers.

This playground lets you use Orca and its companion app Pilot directly in the browser and allows you to publish your creations by sharing their URL.

Table of Contents

Creative Coding History

Modern Creative Coding Uses

Graphics Concepts

Creative Coding Environments and Libraries

Communication Protocols

Multimedia Tools

Unique Displays and Touchscreens

Hardware

Other output options

More resources

Originally captured as the medium for Ed Ruscha’s creative work, the more than 65,000 photographs selected from this archive present a unique view of one of Los Angeles’ quintessential streets, Sunset Boulevard, and how it has changed over the past 50 years. Ed Ruscha, with help from Getty and Stamen Design, is making this amazing collection accessible to you: explore his images of Sunset and discover your own story of Los Angeles.

Shrub is a tool for painting-and-traveling, and even for painting while moving your own body (for example to use the color of your own pants).

If you touch with two fingers, you can immediately send your drawing as an SMS message. Shrub is designed as a mobile communication tool as much as a mobile drawing tool.

More pro tips: For the best drawings, pinch with your fingers to change the brush size. Twist with your fingers to change the brush softness. And of course, tap with one finger to show and hide the viewfinder.

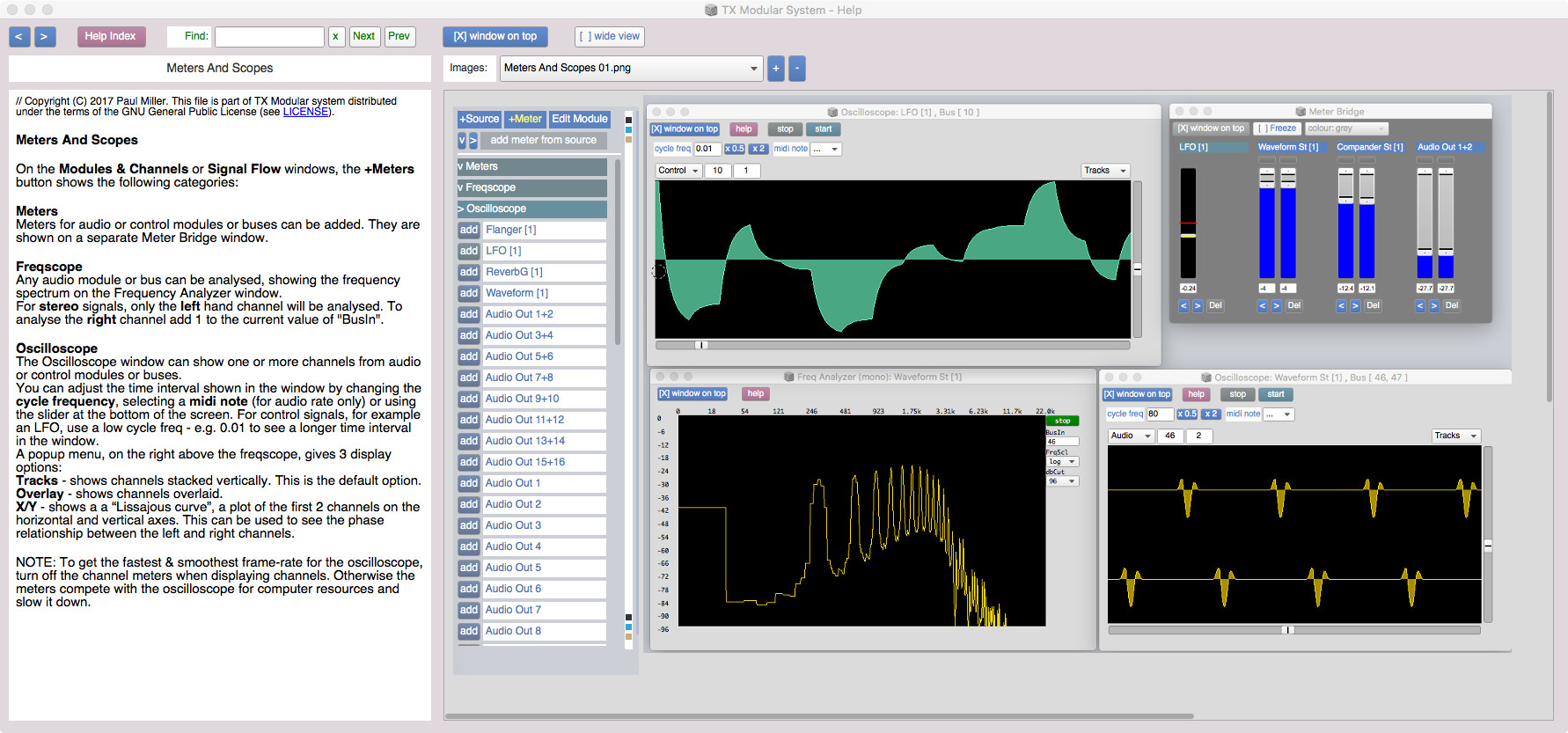

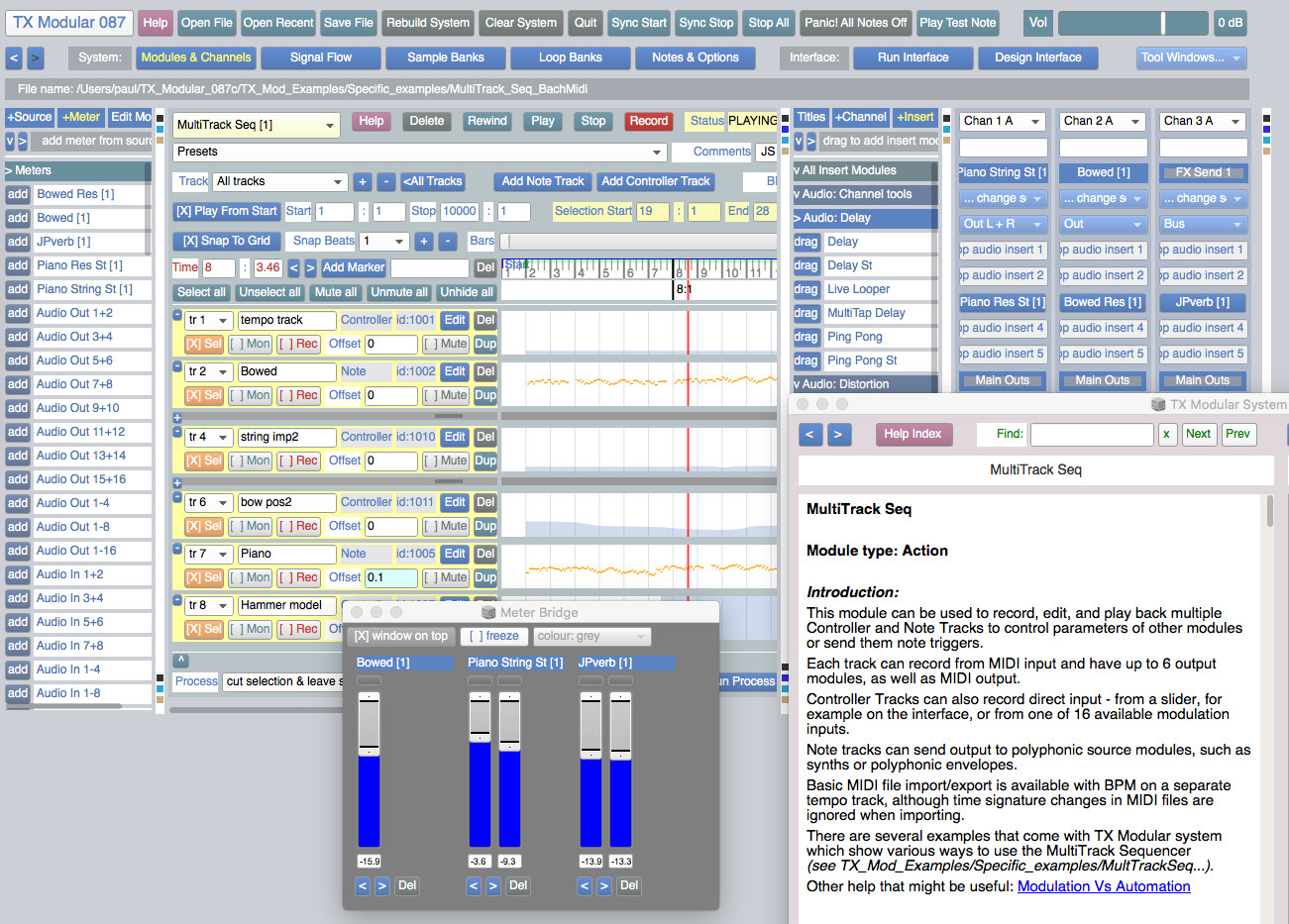

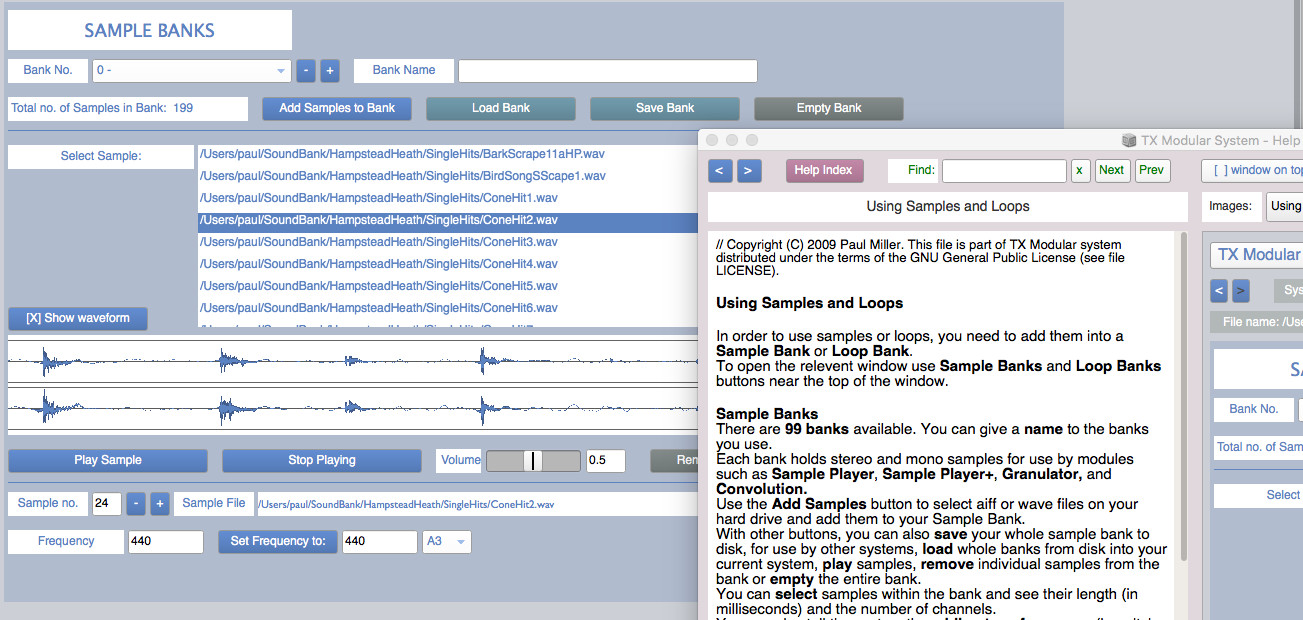

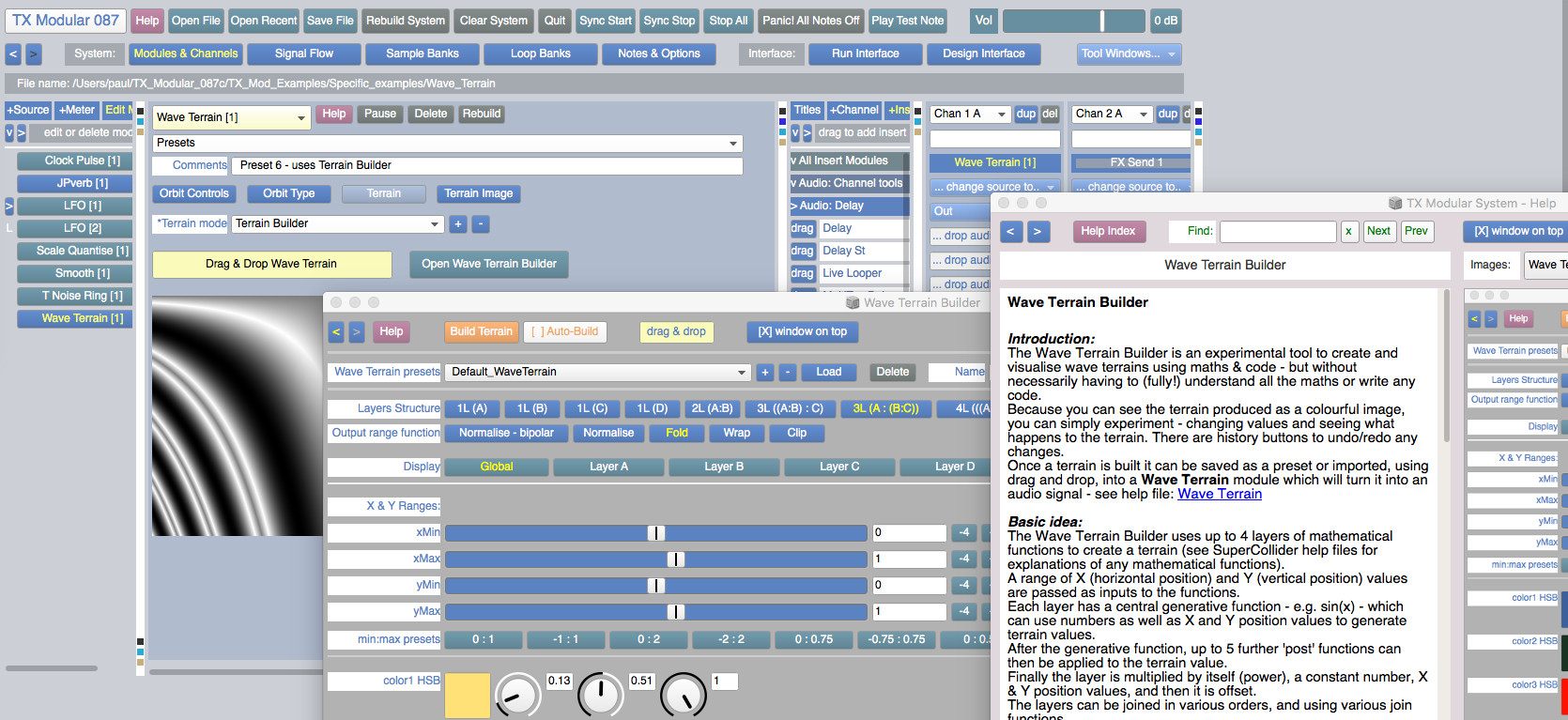

The TX Modular System is open source audio-visual software for modular synthesis and video generation, built with SuperCollider (https://supercollider.github.io) and openFrameworks (https://openFrameworks.cc).

It can be used to build interactive audio-visual systems such as: digital musical instruments, interactive generative compositions with real-time visuals, sound design tools, & live audio-visual processing tools.

This version has been tested on MacOS (0.10.11) and Windows (10). The audio engine should also work on Linux.

The visual engine, TXV, has only been built so far for MacOS and Windows - it is untested on Linux.

The current TXV MacOS build will only work with Mojave (10.14) or earlier (10.11, 10.12 & 10.13) - but NOT Catalina (10.15) or later.

You don't need to know how to program to use this system. But if you can program in SuperCollider, some modules allow you to edit the SuperCollider code inside - to generate or process audio, add modulation, create animations, or run SuperCollider Patterns.

Comprehensive overview of existing tools, strategies and thoughts on interacting with your data

TLDR: when I read I try to read actively, which for me mainly involves using various tools to annotate content: highlight and leave notes as I read. I've programmed data providers that parse these annotations and provide a nice interface for interacting with this data from other tools. My automated scripts use them to render these annotations in human-readable and searchable plaintext and to generate TODOs/spaced repetition items.

In this post I'm gonna elaborate on all of that and give some motivation, a review of these tools (mainly focusing on open source, and thus extendable, software) and my vision of how they could work in an ideal world. I won't try to convince you that my method of reading and interacting with information is superior for you: it doesn't have to be, and there are people out there more eloquent than me who do that. I assume you want this too and are wondering about the practical details.

This database* is an ongoing project to aggregate tools and resources for artists, engineers, curators & researchers interested in incorporating machine learning (ML) and other forms of artificial intelligence (AI) into their practice. Resources in the database come from our partners and network; tools cover a broad spectrum of possibilities presented by the current advances in ML like enabling users to generate images from their own data, create interactive artworks, draft texts or recognise objects. Most of the tools require some coding skills, however, we’ve noted ones that don’t. Beginners are encouraged to turn to RunwayML or entries tagged as courses.

*This database isn’t comprehensive—it's a growing collection of research commissioned & collected by the Creative AI Lab. The latest tools were selected by Luba Elliott. Check back for new entries.

Via: https://docs.google.com/document/d/1TkusCE5mS4tuTYoBwaTV4aJKdSVsf9jFKsGJCx8M05c/edit

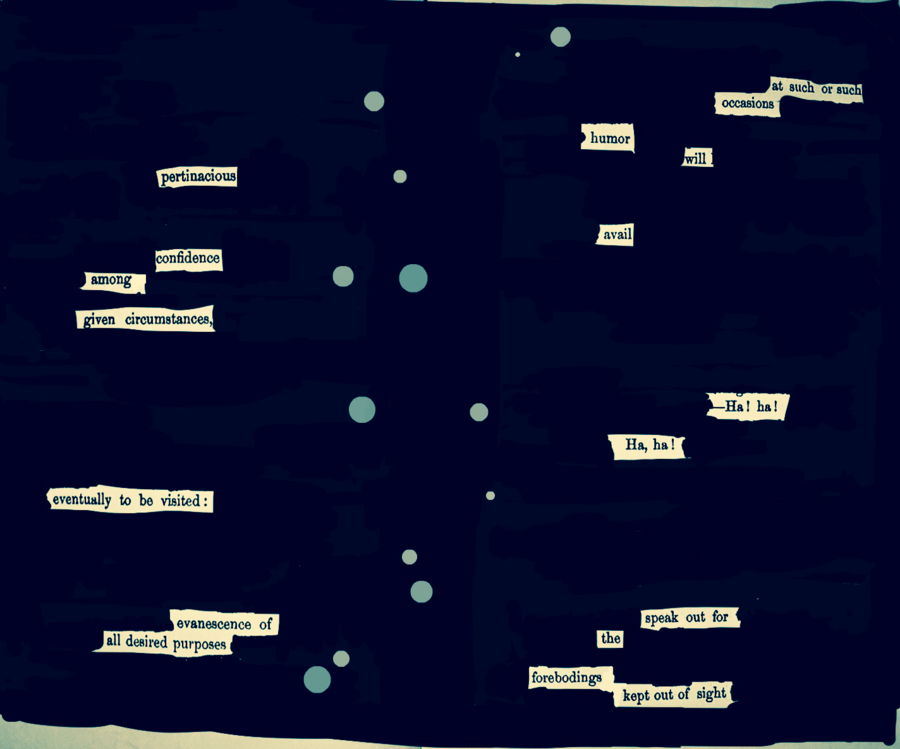

Blackout poetry is made by colouring over parts of an existing text, so that only selected words remain visible, creating a poem.

To use this tool, you can select a text from the samples, or paste your own text source into the custom text field. Your chosen text will appear in the large box to the right.

With your mouse or touchscreen, select the words from the text you want to keep, and, when you are ready, press the black out button.

If you want to save the result as an image, maybe to post to your social network of choice, scroll down and hit Render as image. You can then save the image directly to your device.
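The blackout step itself is simple to express in code: keep the selected words and cover everything else. This minimal sketch makes two assumptions: words are runs of non-whitespace, and the selection arrives as a set of word indices. Both are hypothetical choices, not necessarily how this tool works.

```python
import re


def black_out(text, keep):
    """Cover every word whose index is not in `keep` with full-block characters.

    Whitespace is preserved, so the layout of the source text survives.
    """
    out = []
    index = 0
    # Splitting on a captured group keeps the whitespace runs in the result.
    for part in re.split(r"(\s+)", text):
        if not part or part.isspace():
            out.append(part)
            continue
        out.append(part if index in keep else "\u2588" * len(part))
        index += 1
    return "".join(out)


print(black_out("the sea was calm tonight", {1, 3}))
```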